The following is the transcript of my talk at Akademy 2012. During the talk I strayed quite a bit from this script, but in the end I managed to cover most of the major points that I wanted to talk about. Either way, this is the complete idea that I wanted to present, and the discussions can continue elsewhere.

Good morning. The topic for my talk this morning is Localizing software in Multi-cultural environments. Before I start, I’d like to quickly introduce myself. For most of my talks, I include a slide at the very end with my contact details, but after the intense interactive sessions I forget to mention them. I did not want to make that mistake this time. My name is Runa and I am from India. This is the first time I am here in Estonia and at Akademy. For most of my professional life, I have been working on various things related to the localization of software and technical documentation. This includes translation, testing, internationalization, standardization, and work on various tools. At times I also try to reach out about the importance of localization and why we need to start caring about the way it is done. That is precisely why I am here today: to talk about how localization can have hidden challenges and why it is important that we share knowledge and experience on how we can solve them.

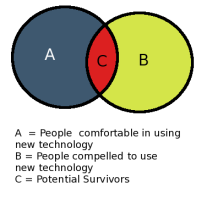

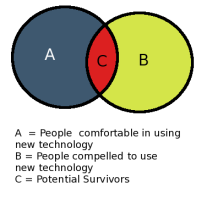

Before I start the core part of the discussion today, I wanted to touch on why localization of software is becoming far more important now. (I was listening to most of the talks in this room yesterday, and it was interesting to note that a theme that reappeared in most of them was about finding ways to simplify adjusting to a world of growing devices and information.) These days there is a much larger dependence on our devices for communication, basic commercial needs, travel etc. These could be devices we own or the ones in public spaces. It is often assumed to be an urban requirement, but with improvement in communication technology this is not particularly the case. Similarly, the other assumption is that the younger generation is more accustomed to the use of these devices, but again that is changing – out of compulsion or choice.

The other day I was watching this series on BBC about the London Underground. And there was this segment about how some older drivers who had been around for more than 40 years opted out of service and retired when some new trains were introduced and they did not feel at ease with the new system. Now I am not familiar with the consoles and cranks in the railway engines but for the devices and interfaces that we deal with, among other things localization is one major aspect that we can use to help make our interfaces easy. We owe it to the progress that we are imposing in our lives.

The reason I chose to bring this talk to this conference was primarily the fact that it was being held here, in Europe. In terms of linguistic and cultural diversity, India by itself perhaps has as many complexities as the entire continent of Europe put together. However, individual countries and cultural groups in Europe depict a very utopian localization scenario, which may or may not be entirely correct. I bring this utopian perspective here as a question, which I am hoping will be answered during this session through our interactions. I’ll proceed now to describe the multi-cultural environment that I and most of my colleagues in India work in.

Multi-cultural structure:

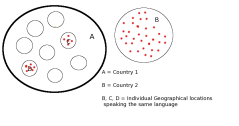

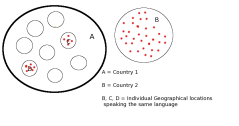

Firstly, I’d like to state here that I do not use the term multi-cultural in any anthropological sense. Instead it is a geopolitical perspective. Present day India is divided into 28 states and 7 union territories, and the primary basis for this division is … well ‘languages’. I’d like to show you a very simple pictorial representation of how it essentially is at the ground level.

The big circle here is our country and the smaller ones are the states. Each of the states has a predominant population of native speakers of its language. Some states may even have multiple state languages with equally well distributed populations. At this point, I’d like to mention that India has 22 languages recognised by the Indian constitution for official purposes, with Hindi and English being considered the primary official languages, the latter being a legacy from the British era. The individual states have the freedom to choose the additional language or languages that they’d like to use for official purposes, and most states do have a 3rd and sometimes 4th official language. So the chances are that if you land up at a place where Hindi is not the primary language of communication, you’d see the public signs written in a minimum of 3 languages. Going back to our picture, I have marked the people in each of these states in their distinctive colour. They have their own languages and their own regional cultures.

However, that is not quite how things stand in practice. We have people moving away from their home states to other states. Why? Well, first for reasons of employment in both government and private sector jobs. Then there is education, business, defence postings and various other common enough reasons. And given it’s a country we are talking about, people have complete freedom to move about without additional complications of visas or residence permits. So in reality the picture is somewhat like this.

The other multi-cultural grouping is when languages cross geographical borders. Mostly due to colonial legacy or new world political divisions and migration, some languages exist in various places across the world, like Spanish or French and, closer home for me, my mother tongue Bengali, which is spoken in both India and Bangladesh. In these cases, the languages in use often take on regional flavours and create their own distinctive identity to be recognised as independent dialects – like Brazilian Portuguese. At other times they stay true to their original form to a large extent – as practised by the Punjabi or the Tamil speaking diaspora.

While discussing the localization scenario, I’ll be focusing on the first kind of multi-cultural environment i.e. multiple languages bound together by geographical factors so that they are forced to provide some symmetry in their localized systems.

Besides the obvious complexities with the diversity, how exactly does this complicate matters on the software localization front? To fully understand that, we would need to first list the kind of interfaces that we are dealing with here.

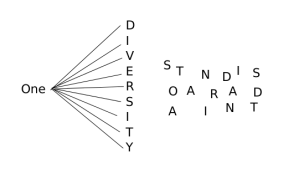

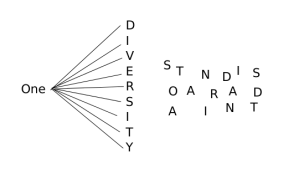

In public spaces we have things like ATM machines, bank kiosks, railway and airline enquiry kiosks, and ticketing machines, while on the individual front we have various applications on desktop computers, tablets, mobile phones, handheld devices, GPS systems etc. If you were here during Sebas’ talk yesterday afternoon, the opening slide had the line ‘devices are the new ocean’. Anyway, some of these applications are for personal use, while some others may be shared, for instance, in the workplace or in educational institutes. In each of these domains, when we encounter a one to many diversity ratio, the first cookie that crumbles is standardization.

Language is one of the most fundamental personal habits that people grow up with. A close competitor is a home cooked meal. Both are equally personal and people do not for a moment consider the fact that there could be anything wrong with the way they have learnt it.

Going back to the standardization part, two sayings very easily summarize the situation here, one in Hindi and the other in Bengali:

1. do do kos mein zuban badal jaati hain i.e. the dialect in this land changes in every 2 kos (about 25 miles)

2. ek desher buli onyo desher gaali i.e. harmless words in one language may be offensive when used in another language

So there is no one best way of translating that would work well for all the languages. The immediate question that would come to mind is, why is there a need to find a one size fits all solution?

These are independent languages after all. While independent translation does work well to a large extent, there are situations where effective standardization is much in demand.

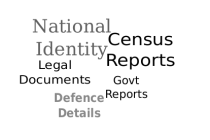

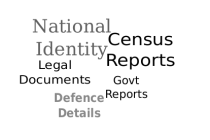

For instance, in domains which span the diversity, like national defence, census records, law enforcement and police records, national identity documents etc.

Complications arise not just in identifying the ideal terminology, but in finding a terminology that can quickly adapt to change. The major obstacle comes from the fact that a good portion of these technological advancements were introduced much before Indian languages were made internationalization-ready. As a result, the users have become familiar with the original English terminology in a lot of cases. There were also people who knew the terms indirectly, perhaps someone like a clerk in the office who did not handle a computer but regularly needed to collect printouts from the office printer. So when localized interfaces started to surface, besides the initial mirth, they caused quite a bit of hindrance in everyday work. Reasons ranged from non-recognition of terminology to sub-standard translations. So we often get asked the question: when English is an official language and does cut across all the internal boundaries, why do you need to localize at all? It is a justified query, especially in a place like India, which inherited English from centuries of British rule. However, familiarity with a language is not synonymous with comfort. A good number of people in the work force or in the final user group have not learnt English as their primary language of communication. What they need is an interface that they can read faster and understand quickly to get their work done. In some cases, transliterated content has been known to work better than translated content.

The other critical factor comes from an inherited legacy. Before independence, India was dotted with princely states, kingdoms and autonomous regions. They often had their own currency and measurement systems, which attained regional recognition and made their way into the language of that region. A small example here.

In Bengali, the word used to denote currency is Taka. So although the Indian currency is the Rupee, when it is to be denoted in Bengali the word Rupee is completely bypassed and Taka is used instead. So 1 Rupee is called ek taka in Bengali. When we say Taka in Bengali in India, we mean the Indian Rupee (symbol ₹). But as an obvious choice, Taka (symbol ৳) has been adopted as the name for the currency of Bangladesh. So if a localized application related to finance or banking uses Taka as the term for currency, a second level of check needs to be done to understand which country’s currency is being talked about, and only then can the calculations be done. To address issues of this nature, we often have translations for the same language being segregated into geographical groups, mostly based on countries.
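The "second level of check" described above can be sketched roughly as follows. This is only an illustration, assuming the application knows the user's locale as a tag such as `bn_IN` or `bn_BD`; the `Currency` type, the `TAKA_BY_REGION` table and the `resolve_taka` function are hypothetical names made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Currency:
    code: str    # ISO 4217 currency code
    symbol: str  # display symbol


# Hypothetical lookup table: the same Bengali word maps to a
# different currency depending on the region of the locale.
TAKA_BY_REGION = {
    "IN": Currency("INR", "₹"),  # Bengali as used in India
    "BD": Currency("BDT", "৳"),  # Bengali as used in Bangladesh
}


def resolve_taka(locale_tag: str) -> Currency:
    """Decide which currency 'taka' means for a tag like 'bn_IN' or 'bn-BD'."""
    parts = locale_tag.replace("-", "_").split("_")
    if len(parts) < 2 or parts[0].lower() != "bn":
        # Without a region the word is ambiguous, so refuse to guess.
        raise ValueError(f"need a Bengali locale with a region, got {locale_tag!r}")
    region = parts[1].upper()
    if region not in TAKA_BY_REGION:
        raise ValueError(f"no currency mapping for region {region!r}")
    return TAKA_BY_REGION[region]
```

The sketch deliberately raises an error for a bare `bn` tag instead of picking a default, since a silent guess is exactly what would make a banking calculation go wrong.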

Mono-cultural structure:

This is where I start describing the utopian dream. I do not use the word mono-cultural as an absolute, but would like to imply a predominantly mono-cultural environment. As opposed to the earlier complexity, a lot of places here in Europe are bound by the homogeneity of a predominant language and culture. Due to economic stability and self-sufficiency, the language of the land has been the primary mode of communication, education, and administration. There is no need for a foreign language to bind the people in various parts of the country. If you know your language, you can very well get by from childhood to old age. The introduction of new technology in localized versions completely bypassed any dependency on an initial uptake through English. Without the baggage of an inherited cross-cultural legacy, and bound through the commonality of a technology integrated lifestyle, the terminology was stabilized much faster for adoption. So if you knew how to use an ATM machine in one city, you would most likely be able to use another one just the same in another city. That’s probably the primary reason why various applications are translated much faster in these languages, with a much higher user base. Regional differences aside, a globally acknowledged version of the language is available and not difficult to understand.

How do we deal with the problems that we face in multi-cultural places:

The first thing would probably be to accept defeat about a homogeneous terminology. It would be impractical. But that doesn’t stop one from finding suitable workarounds and tools to deal with these complexities.

1. Collaboration on translations

2. Tools that facilitate collaboration

3. Simplify the source content

4. Tools for dynamic translation functionalities

5. Learn from case studies

6. Standardize on some fronts

Collaboration on translations – When translating, if you come across a term or phrase that you personally struggled to translate or think may pose a problem for other translators, it would be reasonable to leave messages on how to interpret them. Often highly technical terms or terms from a different culture or location are unknown or hard to relate to, and instead of all translators searching for the term individually, a comment from another translator serves as a ready reckoner. The information that can be passed on in this way is: a description of the term or phrase, and how another language has translated it, so that other translators of the same language or of a closely related language can quickly identify how to translate it.

Tools that facilitate collaboration – To collaborate in this manner, translators often do not have any specific tools or formats to leave their comments. So when using open source tools, translators generally have to leave these messages as ‘comments’, which may or may not be noticed by the next translators. Instead, it is beneficial if the translation tools allow for cross-referencing across other languages as a specialized feature. I believe the proprietary translation tools do possess such features for collaboration.
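As a rough idea of what such a cross-referencing feature could look like, here is a minimal sketch. It assumes the tool already holds the translations of each language in memory as a simple mapping; the `cross_reference` function and the catalog layout are hypothetical, and the translated strings below are placeholders rather than real translations.

```python
from typing import Dict, Iterable, List, Tuple

# Hypothetical layout: language code -> {source string -> translation}
Catalogs = Dict[str, Dict[str, str]]


def cross_reference(catalogs: Catalogs, source: str,
                    related_langs: Iterable[str]) -> List[Tuple[str, str]]:
    """Return (language, translation) pairs for every related language
    that has already translated the given source string."""
    found = []
    for lang in related_langs:
        translation = catalogs.get(lang, {}).get(source)
        if translation is not None:
            found.append((lang, translation))
    return found


# Placeholder data: a Marathi translator checking what Hindi and Bengali did.
catalogs = {
    "hi": {"Print": "<Hindi translation of Print>"},
    "bn": {},  # Bengali has not translated this string yet
}
suggestions = cross_reference(catalogs, "Print", ["hi", "bn"])
```

Here `suggestions` holds only the Hindi entry, which the tool could show next to the string being translated instead of leaving it buried in a comment.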

Simplify the source content – However, until such features are integrated or collaborative practices adopted, a quick win towards easier translation is to go back to the source content creators for explanations or with requests to simplify their content. The original writers of the user interface messages try to leave their creative stamp on the applications. This may include cleverly composed words, simplified words, new usage of existing words, local geographic references, colloquial slang, analogies from an unrelated field or even newly created terms which do not have parallel representations in other languages. Marta had mentioned a similar thing yesterday during her talk, where she said that humour in commit messages should ideally be well understood by whoever is reading them. If taken as a creative pursuit, translators have the liberty to come up with their version of these creations. However, when we are looking at technical translations for quick deployments, the key factor is to make it functional. So while the translators can reach out to the content creators, the original content creators could also perhaps run a check before they write their content, to see if it will be easy to translate.

Tools for dynamic translation functionalities – Before coming here to Estonia, I had to read up some documents related to visas etc. which were not available in English. The easiest way to get a translated version of the text from German was through an online translation platform. Due to the complexity of Indian languages, automatic translation tools for them have not yet evolved to the same levels of accuracy as we can otherwise see for European languages. But the availability of such tools would benefit societies like ours, where people do move around a lot. Going back to an earlier example of a ticket booking kiosk, let’s assume a person has had to move out of their home state and is not proficient in either of the two official languages or in the local language of the state they have moved to. In such a case, our users would benefit if the application on the kiosk has a feature so that interfaces for additional languages can be generated as per requirements, either from existing translated content or dynamically. This is for the interface display. The other part is to allow simplified writing applications like phonetic and transliteration keyboards for writing complex scripts quickly.
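The kiosk scenario can be sketched as a simple fallback chain: try the languages the user prefers, then fall back to the official languages. This is a minimal sketch under my own assumptions; `choose_language`, `translate`, the `OFFICIAL_LANGUAGES` default and the message key are hypothetical, and a real kiosk could also call a machine translation service when no stored translation exists.

```python
from typing import Dict, Iterable, Optional, Set

# Assumed defaults: Hindi and English, the two official languages.
OFFICIAL_LANGUAGES = ("hi", "en")


def choose_language(user_prefs: Iterable[str], available: Set[str],
                    defaults: Iterable[str] = OFFICIAL_LANGUAGES) -> str:
    """Pick the first preferred language the kiosk can display,
    falling back to the official languages."""
    for lang in list(user_prefs) + list(defaults):
        if lang in available:
            return lang
    raise LookupError("no usable interface language")


def translate(key: str, chain: Iterable[str],
              catalogs: Dict[str, Dict[str, str]]) -> Optional[str]:
    """Return the first stored translation of a message along the chain,
    or None if the message would have to be translated dynamically."""
    for lang in chain:
        text = catalogs.get(lang, {}).get(key)
        if text is not None:
            return text
    return None
```

For a traveller who prefers Bengali and then Tamil, at a kiosk that offers Tamil, Hindi and English, `choose_language(["bn", "ta"], {"ta", "hi", "en"})` picks Tamil. A `None` from `translate` marks the point where a dynamically generated translation would be needed.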

Standardize on some fronts – However, standardization is a key element that cannot be overlooked at all. As a start, terminology related to the basic functional areas where content is shared across languages needs to be pre-defined, so that there are no chances of discrepancy and even auto-translation functions can be quickly implemented.

Learn from case studies – And of course nothing beats learning from existing scenarios of a similar nature. For instance, a study on how perhaps the Spanish and Italian translation teams collaborated while working on some translations may be applied somewhat effectively to languages with close similarities like Hindi and Marathi.

Conclusion

Whether in a multi-cultural environment or otherwise, localization is here to stay. With the users of various applications growing every day, the need for customization and ease of use will grow simultaneously. And like any other new technology, the importance lies in making the users confident in using it. There is nothing better to boost confidence than providing them with an interface that they can find their way around.

In Agustin’s keynote yesterday afternoon, he mentioned that there is a need for patience to instill confidence during these times of fast moving technology. At a discussion some time back, someone had suggested doing away with written content on the interface and retaining only icons. Ideally, written content can never be completely removed. But yes, it can be made easier to use. Sebas had shared a similar thought yesterday, that technology should be made functional for users’ needs and not because it was fun developing it.

A few months back the Government of India sent out a circular to its administrative offices saying that, in place of difficult Hindi words, Hinglish, or a mix of English and Hindi, could be used to ease the uptake of the language. I wholeheartedly share this view and followed up with a blog post where I mentioned that:

familiar terms should not be muddled up, and the readability of the terms should not be compromised,

primarily to ensure that terminology is not lost in translation when common issues are discussed across geographies, especially in the global culture of the present day that cuts across places like multinational business houses and institutes of higher education.

Next, we made a small shelf with dado joints that can be hung up on the wall. We started off with a block of rubber wood about 1’6’’ x 1’. The measurements for the various parts of this shelf were provided on a piece of paper and we had to cut the pieces using the table saw, set to the appropriate width and angle. The places where the shelves connected with the sides were chiseled out and smoothed with a wood router. The pieces were glued together and nailed. The plane and sander were used to round the edges.

The final project was an exercise for the participants to design and execute an item using a 2’ x 1’ piece of plywood. I chose to make a tray with straight edges using as much of the plywood as I could. I used the table saw to cut the base and sides. The smaller sides were tapered down and handles shaped out with a drill and jigsaw. These were glued together and then nailed firmly in place.